Deep Vendor Reviews Are Now a Requirement Under DORA

Your vendor risk program is a joke. You're collecting paperwork but not reducing risk. Those questionnaires and certifications you're hoarding are only giving you a false sense of security while your critical vendors are creating backdoors into your systems.

DORA changes everything. Regulators aren't just asking whether you've done the work anymore. They're demanding proof that your vendors, especially those handling your crown jewels, are secure at a technical level. And no, that SOC 2 report collecting dust in your SharePoint won't save you when attackers move through your supply chain.

Table of Contents

- Vendor risk management is broken

- What DORA really expects from you

- How to run deep vendor security reviews that actually reduce risk

- How to operationalize deep vendor reviews

- Stop pretending paperwork protects you

Vendor risk management is broken

You know the drill. Send a questionnaire, wait weeks for responses, check if they have SOC 2, then rubber-stamp the approval. Congratulations, you've done absolutely nothing to reduce actual risk.

Paper-based reviews don't reduce risk

Vendors tell you what you want to hear, and you pretend to believe them. Meanwhile, their API keys are hardcoded in GitHub, their S3 buckets are public, and their developers are pushing code without reviews.

Remember SolarWinds? On paper, the company and its customers did everything right. Then attackers compromised the build system and shipped malicious code in a signed update that up to 18,000 organizations installed. Paperwork didn't stop that breach. Technical validation of the build pipeline is the kind of control that could have caught it.

Checklists create an illusion of safety

Checklists confirm that a policy exists; they don’t confirm the policy works. A vendor can pass SOC 2 Type II and still:

- Grant over-privileged service tokens because least privilege isn’t enforced in CI/CD.

- Miss lateral movement because workload logs aren’t joined with IdP events.

- Leak data via internal-only test environments connected to production data.

None of that shows up in a generic questionnaire. It shows up when you review architecture, roles/permissions, logging coverage, and real incidents.

I’ve worked with a payments fintech company that integrated a SaaS analytics tool via OAuth with broad read/write scopes. The vendor had ISO 27001 and SOC 2; due diligence was a 300-question survey plus the reports. Months later, the vendor’s build system leaked an access token. An attacker used the vendor’s integration to query transactions and trigger a small set of fraudulent refunds - no direct breach of the fintech’s systems, but real loss and reporting obligations.

Here’s what the paperwork missed:

- Over-privileged integration: scopes allowed refund initiation, not just analytics reads.

- Weak workload isolation at the vendor: shared runners with permissive secrets access.

- No anomaly detection on vendor calls: the fintech didn’t baseline call patterns from that integration, so high-risk API usage looked normal.

- No contractual audit path to logs: pulling vendor evidence for the post-incident review took weeks, extending customer impact and regulator scrutiny.

A deep review would have forced least-privilege scopes, verified vendor build-pipeline controls, required joined telemetry (IdP, API, workload), and set audit-log delivery expectations in the contract.
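The missing anomaly detection is the easiest of these gaps to picture in code. Here is a minimal sketch of baselining calls from one vendor integration, assuming you can export daily per-endpoint call counts from your API gateway. The endpoint names, the z-score threshold, and both function names are illustrative assumptions, not part of any specific product.

```python
from collections import Counter
from statistics import mean, pstdev


def build_baseline(daily_counts):
    """Build {endpoint: (mean, stdev)} from a list of per-day Counters."""
    endpoints = set().union(*daily_counts)
    baseline = {}
    for endpoint in endpoints:
        series = [day.get(endpoint, 0) for day in daily_counts]
        baseline[endpoint] = (mean(series), pstdev(series))
    return baseline


def flag_anomalies(baseline, today, z_threshold=3.0):
    """Return (endpoint, reason) pairs for calls that break the baseline."""
    alerts = []
    for endpoint, count in today.items():
        if endpoint not in baseline:
            # A method this integration has never used is the strongest signal.
            alerts.append((endpoint, "endpoint never seen from this integration"))
            continue
        avg, stdev = baseline[endpoint]
        # Floor the deviation at 1.0 so flat histories don't alert on +1 call.
        if count > avg + z_threshold * max(stdev, 1.0):
            alerts.append((endpoint, f"{count} calls vs. baseline {avg:.1f}"))
    return sorted(alerts)


history = [
    Counter({"GET /transactions": 100}),
    Counter({"GET /transactions": 110}),
    Counter({"GET /transactions": 90}),
]
today = Counter({"GET /transactions": 105, "POST /refunds": 4})
print(flag_anomalies(build_baseline(history), today))
```

In the fintech example, the analytics integration had never called a refund endpoint before, so even a handful of refund calls would stand out against this kind of baseline.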

Compliance isn't security

Compliance frameworks are the minimum baseline. They tell you if a vendor met a standard at a specific point in time, but not if they're secure today.

Regulators are forcing the shift from trust → verify

Under DORA, you must demonstrate operational resilience across your ICT supply chain. That means you:

- Classify critical and important functions and identify the vendors that underpin them.

- Secure contractual rights to access, test, and audit relevant controls and logs.

- Show ongoing monitoring rather than one-off reviews, with coverage of identity, data, change, and workload telemetry tied to those vendors.

- Include vendors in scenario testing and recovery exercises where their failure impairs your critical services.

In short, regulators are asking for evidence that you can see, manage, and recover from third-party failures.

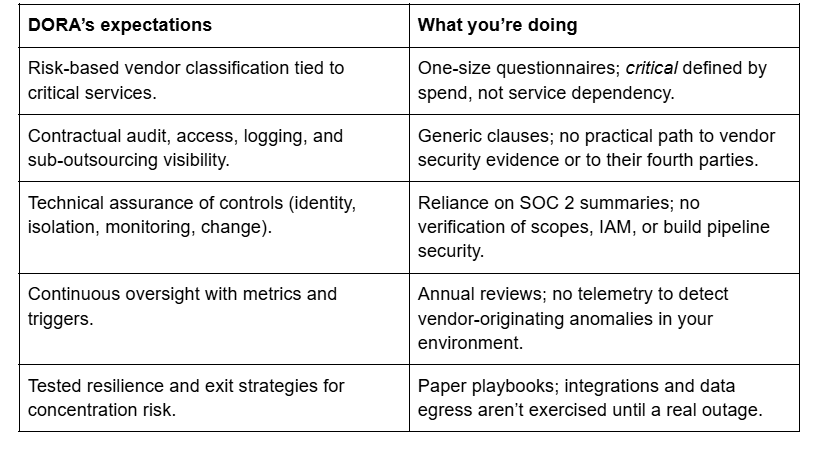

Mapping DORA's expectations vs. today's vendor management practices

What DORA really expects from you

DORA expects you to prove (continuously) that the vendors behind your critical or important functions are controlled, monitored, and replaceable without breaking your business. That means moving from annual audits to live oversight, backed by contracts that give you evidence on demand and the rights to test, audit, and exit.

Continuous oversight

That yearly vendor assessment you're so proud of? It's obsolete the moment you complete it. DORA demands continuous monitoring of your critical vendors, especially those providing ICT services.

Article 28 obligations: Ongoing monitoring

Article 28 is explicit: manage ICT third-party risk as part of your ICT risk management, keep the register current, and ensure you can demonstrate control effectiveness across the relationship lifecycle. Treat this like operational telemetry: if a vendor supports a critical or important function, your oversight has to be continuous and risk-based.

Your current quarterly or annual review cycle won't work anymore. By the time you detect a vendor vulnerability through traditional methods, attackers may have been exploiting it for months.

Technical oversight of ICT third parties

Article 30 requires contracts that grant you rights to information, access, audits, security metrics, incident notifications, service levels, data location/portability, sub-outsourcing visibility, and termination/exit. Use those rights to obtain logs, test recovery, and validate least-privilege access in real environments, then measure it on a cadence. Supervisors and recent ECB guidance expect this level of proof, especially for cloud.

What good looks like:

- You receive vendor telemetry (identity, API, workload, and change logs) mapped to your controls and playbooks.

- You can trigger targeted assessments, tabletop/BCP tests, and evidence requests without renegotiating the contract.

- You track findings to closure with time-bound remediation and verify fixes against live systems.

High-criticality vendors are your weakest link

Your biggest providers amplify risk because they sit in the middle of many critical or important processes. DORA expects you to quantify and control concentration risk: can you substitute them, move data, and keep operating if they fail? Treat this as a business-continuity problem with technical proof, not as a sourcing preference.

Cloud concentration risk and systemic dependencies

Article 29 requires a preliminary assessment of ICT concentration risk before you add or expand a provider: substitutability, portability, and the blast radius if the service fails. EU supervisors have doubled down on this, highlighting the dependency on a small set of hyperscalers and the need for realistic exit and fallback options. Build, test, and document those paths now.
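To make "blast radius" concrete, here is a rough sketch that counts how many of your critical functions depend on each provider and whether an exit plan has been tested. The data shape, the provider names, and the 50% threshold are assumptions for illustration. DORA does not prescribe a formula or a threshold.

```python
from collections import defaultdict


def concentration_report(function_providers, tested_exit_plans, max_share=0.5):
    """Summarize how many critical functions depend on each provider.

    function_providers: {critical function: [providers it depends on]}
    tested_exit_plans: set of providers with a tested exit/fallback plan
    max_share: illustrative threshold, not a regulatory figure
    """
    total = len(function_providers)
    if total == 0:
        return []
    by_provider = defaultdict(set)
    for function, providers in function_providers.items():
        for provider in providers:
            by_provider[provider].add(function)
    report = []
    for provider, functions in sorted(by_provider.items()):
        share = len(functions) / total
        report.append({
            "provider": provider,
            "share": round(share, 2),
            "concentrated": share > max_share,
            "exit_plan_tested": provider in tested_exit_plans,
        })
    return report


functions = {
    "payments": ["cloud-a", "psp-x"],
    "ledger": ["cloud-a"],
    "onboarding": ["cloud-a", "kyc-y"],
    "reporting": ["cloud-b"],
}
for row in concentration_report(functions, tested_exit_plans={"cloud-b"}):
    print(row)
```

A provider that is both concentrated and has no tested exit plan is where the preliminary assessment deserves the most attention.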

Why your biggest providers (AWS, Azure, Stripe, OpenAI) need the deepest scrutiny

The bigger the vendor, the deeper your review should go. These providers have access to your crown jewels, and their security directly impacts yours.

For cloud providers, this means:

- Reviewing IAM configurations and permissions

- Testing network segmentation and security groups

- Validating encryption implementation

- Verifying backup and recovery procedures

For payment processors:

- Testing API security and authentication

- Validating transaction integrity controls

- Verifying fraud detection mechanisms

For AI providers:

- Reviewing data handling and privacy controls

- Testing for prompt injection vulnerabilities

- Validating output filtering and security boundaries

How to run deep vendor security reviews that actually reduce risk

Forget your checklist. Deep vendor reviews require technical validation, threat modeling, and hands-on testing.

Beyond SOC 2 and ISO

SOC 2 and ISO 27001 reports tell you a control existed at a point in time. To know whether it works today, validate it with the vendor's engineers and against real environments.

Validate controls at the engineering level (auth, encryption, IAM)

Ask for short and targeted walkthroughs with the vendor’s engineers. Review how identities and data are handled, not just the policy text. Focus on:

- Authentication & sessions: Supported methods (SAML/OIDC, mTLS), token lifetimes, refresh behavior, device posture signals, session revocation.

- Authorization & scopes: RBAC/ABAC models, least-privilege presets, per-scope rate limits, guardrails for dangerous actions (refunds, key rotation, export).

- Encryption: Data-in-transit (protocols, ciphers, certificate pinning options), data-at-rest (KMS, key ownership/rotation), field-level or envelope encryption for sensitive fields.

- Secrets & keys: Where service credentials live (HSM/KMS, vault), rotation SLAs, just-in-time credentials, audit trails for retrieval.

- Isolation: Tenant isolation model, noisy-neighbor tests, egress filtering, dedicated runtime or VPC peering options for higher assurance.

- Change & release: Signed builds, isolated runners, approval gates for high-risk changes, rollback plans, and how emergency fixes are verified.

- Monitoring & incident flow: What gets logged (identity, API, workload), how fast you’re notified, evidence packages you can request, and who you page at 2 AM.

Example checklist for SaaS vendor deep-dive reviews

Here's what a real deep-dive review includes:

Authentication & Authorization

- Test password complexity enforcement

- Verify MFA implementation and bypass attempts

- Check for session fixation vulnerabilities

- Test API authentication mechanisms

- Validate JWT implementation and signing

Data Protection

- Verify encryption at rest implementation

- Test encryption in transit (TLS versions, cipher suites)

- Validate key management procedures

- Check for data leakage in responses

- Test data retention and deletion

Infrastructure Security

- Review network segmentation

- Test for container escape vulnerabilities

- Validate cloud security configurations

- Check for exposed management interfaces

- Verify backup encryption and integrity

Threat modeling vendors like your own systems

Treat each vendor as another subsystem in your architecture. If their component fails or is abused, where can an attacker go next?

Mapping vendor data flows and integration points into your architecture

Start by mapping how data flows between your systems and the vendor's. This reveals attack paths and trust boundaries that questionnaires miss.

Key questions to answer:

- What data is shared with the vendor?

- How is authentication handled between systems?

- Where is shared data stored and processed?

- What happens if the vendor is compromised?

Identifying joint attack surfaces

The most vulnerable points are where your systems connect. Focus your security testing here:

- APIs: Test for injection vulnerabilities, broken authentication, and excessive data exposure.

- Shared Identities: Validate SSO implementations, federation security, and token handling.

- Webhooks: Verify signature validation, replay protection, and error handling.

- File Transfers: Test for path traversal, malicious file uploads, and metadata leakage.
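For the webhook item, here is a minimal sketch of what signature validation and replay protection can look like on your side. It assumes the vendor signs `<timestamp>.<body>` with HMAC-SHA256 and sends a unique event ID. Real vendors use different header layouts and schemes, so treat the signing format, the five-minute tolerance, and the in-memory ID set as assumptions.

```python
import hashlib
import hmac
import time


def sign_payload(secret, timestamp, body):
    """Assumed scheme: hex HMAC-SHA256 over b"<timestamp>." + body."""
    message = str(timestamp).encode() + b"." + body
    return hmac.new(secret, message, hashlib.sha256).hexdigest()


def verify_webhook(secret, timestamp, body, signature, event_id,
                   seen_event_ids, now=None, tolerance_seconds=300):
    """Accept a webhook only if it is fresh, unseen, and correctly signed."""
    now = time.time() if now is None else now
    if abs(now - timestamp) > tolerance_seconds:
        return False  # stale or future-dated delivery
    if event_id in seen_event_ids:
        return False  # replay of an event already processed
    expected = sign_payload(secret, timestamp, body)
    # Constant-time comparison avoids leaking how many characters matched.
    if not hmac.compare_digest(expected.encode(), signature.encode()):
        return False
    seen_event_ids.add(event_id)  # record only after the signature checks out
    return True


seen = set()
sig = sign_payload(b"shared-secret", 1000, b'{"type":"refund"}')
print(verify_webhook(b"shared-secret", 1000, b'{"type":"refund"}', sig, "evt_1", seen, now=1010))
print(verify_webhook(b"shared-secret", 1000, b'{"type":"refund"}', sig, "evt_1", seen, now=1010))
```

In production the set of seen IDs would live in a shared store with an expiry at least as long as the tolerance window.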

Testing vendors without breaking relationships

Rigorous security testing doesn't have to damage vendor relationships. Frame it as risk reduction for both parties, not as an adversarial audit.

Secure code review for vendor SDKs and integrations

Anything you install or link is your attack surface.

- Pin and verify: Lock SDK versions, verify signatures or checksums, and block auto-update in prod.

- Auth handling: Inspect how the SDK stores tokens, refreshes them, and retries on failure; ensure no tokens hit logs.

- Error handling: Make sure the code fails closed on permission errors and rate limits; add explicit backoff.

- Telemetry: Emit call metadata (who, what, when), correlate with vendor logs, and alert on unusual methods or volumes.

- Secrets: Pull creds from your vault at runtime, rotate on schedule, and ban local env files in CI/CD.
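The "pin and verify" step can be sketched in a few lines: hash the SDK artifact you downloaded and compare it against a checksum you recorded when the version was approved. The package name, version, and pin table below are hypothetical, and this checks a SHA-256 digest only. Verifying a publisher signature is a separate step.

```python
import hashlib


def sha256_of_file(path, chunk_size=65536):
    """Hash a file in chunks so large artifacts don't load into memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_pinned(name, version, actual_digest, pins):
    """Raise if the artifact is not allowlisted or its digest differs."""
    expected = pins.get((name, version))
    if expected is None:
        raise ValueError(f"{name}=={version} is not on the allowlist")
    if actual_digest != expected:
        raise ValueError(f"{name}=={version} checksum mismatch")
    return True


# Hypothetical pin table, recorded when the SDK version was reviewed.
PINS = {("vendor-sdk", "2.4.1"): hashlib.sha256(b"reviewed build").hexdigest()}

digest = hashlib.sha256(b"reviewed build").hexdigest()
print(verify_pinned("vendor-sdk", "2.4.1", digest, PINS))
```

Run it in CI with `sha256_of_file` on the downloaded artifact, so an unreviewed version or a tampered build fails the pipeline instead of reaching production.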

Red-team shared workflows and API interactions (in a safe way)

Run scenario tests in the vendor’s sandbox or a ring-fenced tenant you control. Agree the scope and success criteria up front.

- Abuse valid access: Try over-privileged scopes, privilege escalation via role grants, and cross-tenant data access attempts.

- Transaction safety: Attempt replay, double-spend, or idempotency bypass on state-changing endpoints.

- Exfil paths: Trigger large exports, long-running queries, or unusual filters; confirm alerts fire and throttles work.

- Session hardening: Test token theft resistance (short lifetimes, binding), forced logout, and admin approval gates.

- BCP exercises: Kill the integration token, rotate keys, or simulate a vendor outage; measure detection, failover, and recovery time.

What you keep as evidence

- Test plans, vendor approvals, and timestamps.

- Before/after configs and access grants.

- Logs from both sides with correlated IDs.

- Findings, fixes, and a retest record.

How to operationalize deep vendor reviews

Deep vendor reviews only work if they’re built into how your teams ship software. If reviews live in spreadsheets and ad-hoc meetings, you’ll miss risk signals, burn time on manual checks, and struggle to prove oversight under DORA. The fix is operational: automate the signals, wire reviews into engineering workflows, and report outcomes in a way leadership and regulators trust.

Automate vendor oversight so you see risk as it happens

Annual reviews won’t catch a leaked key or a new critical CVE. You need continuous signals tied to the vendors that power your critical functions, with clear owners and thresholds for action.

Continuous monitoring for vendor risk signals

Stand up always-on feeds that map to each vendor and integration. Track identity, code, and infrastructure signals that indicate real exposure, not just noise. Route alerts to the owning team with runbooks for contain→investigate→remediate.

Operational outcomes to target

- Coverage: 100% of critical vendors mapped to signal sources (credentials, code, infra, data).

- Detection: Alerts within minutes for leaked tokens, new critical vulns, or permission changes.

- Evidence: Auto-collected logs (who/what/when) packaged for incident and audit use.
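As a taste of what a leaked-credential signal looks like, here is a minimal scanner you could point at text pulled from public repos or paste sites associated with a vendor. The two patterns are illustrative. Dedicated secret scanners cover far more formats, and the function and field names are assumptions.

```python
import re

# Illustrative patterns only; a real scanner needs a much larger rule set.
PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key_block": re.compile(r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"),
}


def scan_for_secrets(text, vendor):
    """Return one finding per matching line, with the secret redacted."""
    findings = []
    for line_number, line in enumerate(text.splitlines(), start=1):
        for kind, pattern in PATTERNS.items():
            match = pattern.search(line)
            if match:
                findings.append({
                    "vendor": vendor,
                    "kind": kind,
                    "line": line_number,
                    # Keep only a prefix so the alert itself doesn't leak the key.
                    "redacted": match.group(0)[:4] + "...",
                })
    return findings


sample = 'region = "eu-west-1"\naws_key = "AKIAIOSFODNN7EXAMPLE"\n'
print(scan_for_secrets(sample, vendor="analytics-vendor"))
```

Each finding carries the vendor name so the alert can be routed to the owning team and its runbook.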

Using AI-driven review tools to cut manual analysis

AI can read vendor docs, extract control claims, compare against your standards, and flag gaps. It can also correlate logs across your estate and the vendor’s APIs to surface anomalies. Keep humans in the loop for risk decisions.

Where AI helps

- Summarizing SOC/ISO appendices into control matrices with missing-evidence flags.

- Classifying alerts (false positive, real risk, business impact) using historical context.

- Generating follow-up questions and evidence requests from your review template.

Guardrails

- Require human approval for risk ratings and exceptions.

- Store AI outputs with sources; no black box findings.

- Retrain on confirmed outcomes so noise drops over time.

Build vendor reviews into engineering workflows

Security reviews shouldn't be isolated from engineering processes. Integrate them into existing workflows for better adoption.

Standardized review templates for vendor onboarding

Make the first conversation with a vendor productive and repeatable. Your template should capture the minimum evidence to assess risk and set expectations for ongoing monitoring.

Template essentials

- Integration scope: data classes, actions the vendor can perform, required API scopes.

- Identity & access: SSO method, role model, token lifetimes, break-glass controls.

- Encryption & keys: KMS ownership, rotation cadence, customer-managed keys support.

- Logging & evidence: APIs for audit logs, schemas, retention, time-to-evidence SLA.

- Change & incident: release process, signed artifacts, notification triggers, contact paths.

- Sub-outsourcing: list of subprocessors; inheritance of controls and logging.
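If you keep the template as structured data rather than a document, gaps become easy to spot. The sketch below models a subset of the essentials above as a dataclass where `None` means "not yet answered". The field names are assumptions, so adapt them to your own template.

```python
from dataclasses import dataclass, fields
from typing import Optional


@dataclass
class VendorReviewTemplate:
    """Onboarding evidence for one vendor; None means not yet provided."""
    vendor: str
    data_classes: Optional[list] = None
    api_scopes: Optional[list] = None
    sso_method: Optional[str] = None
    token_lifetime_minutes: Optional[int] = None
    customer_managed_keys: Optional[bool] = None
    audit_log_api: Optional[str] = None
    time_to_evidence_hours: Optional[int] = None
    incident_contact: Optional[str] = None
    subprocessors: Optional[list] = None  # [] is a valid answer: none used


def missing_evidence(review):
    """List template fields the vendor has not answered yet."""
    return [f.name for f in fields(review) if getattr(review, f.name) is None]


review = VendorReviewTemplate(
    vendor="analytics-vendor",
    data_classes=["transactions"],
    api_scopes=["transactions:read"],
    sso_method="OIDC",
    customer_managed_keys=False,
    subprocessors=[],
)
print(missing_evidence(review))
```

Note that `False` and an empty list count as answers here. Only unanswered fields show up as missing.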

Integrating vendor assessments into CI/CD pipelines and threat modeling sessions

Treat vendor code and connections like any other change to production.

Actions to bake in

- Dependency gates: Allowlist vendor SDK versions; block unsigned or auto-updating packages.

- Secrets discipline: Pull vendor creds from your vault at runtime; rotate on schedule; ban plaintext in CI.

- Scope tests: Unit/contract tests that fail builds if OAuth scopes exceed the approved set.

- API contract tests: Idempotency, pagination, and rate-limit behavior validated in sandbox.

- Threat modeling in sprints: Add vendor data flows and identities to feature-level models; document mitigations in the ticket.
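The scope test is the simplest of these to automate. Here is a minimal sketch, assuming you keep an approved-scope list per vendor in your repo and can read the scopes an integration requests from its config. The vendor name, the scope strings, and the function name are hypothetical.

```python
# Hypothetical allowlist, agreed during the vendor review.
APPROVED_SCOPES = {
    "analytics-vendor": {"transactions:read"},
}


def excess_scopes(vendor, requested_scopes):
    """Return requested scopes that are not approved for this vendor.

    An unknown vendor has no approved scopes, so everything it
    requests is reported (the check fails closed).
    """
    approved = APPROVED_SCOPES.get(vendor, set())
    return sorted(set(requested_scopes) - approved)


def test_analytics_vendor_scopes():
    # In a real pipeline, load this list from the integration's config.
    requested = ["transactions:read"]
    assert excess_scopes("analytics-vendor", requested) == []


test_analytics_vendor_scopes()
print(excess_scopes("analytics-vendor", ["transactions:read", "refunds:write"]))
```

Had a check like this run in the fintech example, the refund scope would have failed the build before the integration went live.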

Report in board language and map to DORA without extra work

Leadership needs clarity on exposure and progress. Supervisors need proof of control effectiveness. Build reporting off the same data you use to operate, then frame it for each audience.

Converting technical findings into board-ready metrics

Create metrics that matter:

- % of critical vendors with continuous telemetry and time-to-evidence ≤ 24 hours.

- % of integrations running least-privilege scopes; number of scope reductions this quarter.

- MTTD/MTTR for vendor-originated incidents; trend vs. last quarter.

- Exit readiness: # of vendors with tested failover/exit runbooks in the last 12 months.

- Open vs. remediated findings by severity, with SLA performance by owning team.
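Several of these metrics fall straight out of the data you already hold. The sketch below assumes a vendor register and an incident list kept as simple records. The field names are hypothetical, and least privilege is tracked per vendor here as a simplification of per-integration tracking.

```python
from statistics import mean


def pct(part, whole):
    return round(100 * part / whole, 1) if whole else 0.0


def board_metrics(vendors, incidents):
    """Roll vendor and incident records up into board-level numbers."""
    critical = [v for v in vendors if v["critical"]]
    covered = [v for v in critical
               if v["telemetry"] and v["time_to_evidence_hours"] <= 24]
    least_privilege = [v for v in critical if v["least_privilege"]]
    return {
        "telemetry_coverage_pct": pct(len(covered), len(critical)),
        "least_privilege_pct": pct(len(least_privilege), len(critical)),
        "exit_ready_vendors": sum(1 for v in critical if v["exit_tested"]),
        "mttd_minutes": mean(i["detect_minutes"] for i in incidents) if incidents else None,
        "mttr_minutes": mean(i["resolve_minutes"] for i in incidents) if incidents else None,
    }


vendors = [
    {"name": "cloud-a", "critical": True, "telemetry": True,
     "time_to_evidence_hours": 4, "least_privilege": True, "exit_tested": True},
    {"name": "psp-x", "critical": True, "telemetry": True,
     "time_to_evidence_hours": 48, "least_privilege": False, "exit_tested": False},
    {"name": "ai-z", "critical": True, "telemetry": False,
     "time_to_evidence_hours": 72, "least_privilege": True, "exit_tested": False},
    {"name": "swag-shop", "critical": False, "telemetry": False,
     "time_to_evidence_hours": 0, "least_privilege": True, "exit_tested": False},
]
incidents = [
    {"detect_minutes": 30, "resolve_minutes": 240},
    {"detect_minutes": 90, "resolve_minutes": 480},
]
print(board_metrics(vendors, incidents))
```

Computing the numbers from the operational records, rather than maintaining them by hand, is what keeps the board view and the audit evidence consistent.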

Track these metrics over time. Leadership needs to see the trend in how vendor security affects business risk, not a one-off snapshot.

Demonstrating defensible oversight under DORA

Document your vendor security program so you can show supervisors how it meets DORA's requirements.

What your audit pack should include

- Current vendor register with critical/important designations and rationale.

- Contracts with rights to logs, testing, audit, sub-outsourcing visibility, and exit.

- The last 12 months of evidence pulls: log samples, test results, tabletop outcomes.

- Closed-loop records: findings, owners, deadlines, retest proofs.

- Scenario testing notes that include vendor failure modes and recovery timings.

Stop pretending paperwork protects you

What matters now is showing that you understand your dependencies at a technical level and can prove you’ve built defenses around them. Done right, deep vendor reviews reduce the chance of cascading failures, cut the cost of incident response, and give your board and regulators confidence that you’re in control.

Your ability to manage third-party risk is now a board-level and regulatory expectation, not just another security best practice.

And if you want a head start, SecurityReview.ai maps vendor risks directly to DORA compliance requirements, turning technical findings into regulator-ready reports. That way, you can focus on real risk reduction while proving defensible oversight.

Keep up with attackers and auditors, and start looking deeper at your vendors now.

FAQ

What is DORA and why does it matter for vendor risk management?

DORA (Digital Operational Resilience Act) is an EU regulation that requires financial institutions to demonstrate resilience across their technology supply chains. It matters for vendor risk management because it shifts the expectation from checking certifications to proving that critical vendors are secure at a technical level.

How does DORA change traditional vendor risk management practices?

Traditional programs often rely on questionnaires, SOC 2 reports, or ISO certifications. Under DORA, this is not enough. Firms must continuously monitor vendors, validate technical controls like authentication and encryption, and prove they can recover if a vendor fails.

What is the difference between compliance checks and security assurance under DORA?

Compliance checks verify whether a policy or certification exists. Security assurance under DORA means showing that vendor controls work in practice and that your organization can detect, respond to, and recover from third-party incidents.

What is the business value of deep vendor reviews under DORA?

The main benefits are reduced risk of cascading vendor failures, faster incident response, lower exposure to regulatory penalties, and stronger board-level confidence. Deep reviews also help avoid costly disruptions caused by supply chain attacks.

What does a “deep” vendor security review look like?

A deep review validates controls at the engineering level. It includes checking how the vendor handles authentication, authorization, encryption, tenant isolation, monitoring, and incident response. It also involves mapping vendor data flows into your systems and testing high-risk integration points.

How should organizations threat model their vendors?

Treat vendors as part of your architecture. Map data flows, APIs, and shared identities. Identify where attackers could move between your environment and the vendor’s. Then define controls such as least-privilege scopes, audit logging, and anomaly detection to reduce exposure.

How often should vendors be reviewed under DORA?

DORA expects continuous oversight, not annual reviews. That means real-time monitoring for leaked credentials, new vulnerabilities, or vendor-originated anomalies. Critical vendors require the highest frequency of technical assurance.

Which vendors need the most scrutiny under DORA?

The vendors that underpin critical or important functions need the most scrutiny: typically cloud providers (AWS, Azure, GCP), SaaS platforms, payment processors, and AI providers. These represent systemic risk and require deeper contractual rights, technical validation, and tested exit strategies.

What does DORA say about concentration risk in cloud and SaaS?

DORA requires institutions to assess and mitigate ICT concentration risk. This means evaluating substitutability, portability, and the systemic impact of relying on a small number of providers. Regulators expect tested fallback and exit plans.

What should security leaders do next to prepare for DORA vendor oversight?

Review whether current vendor assessments go beyond paperwork. Prioritize deep reviews for high-criticality providers. Automate continuous monitoring for risk signals. Align contracts with Article 30 requirements for audit, evidence, and sub-outsourcing. Build defensible reporting that shows oversight and resilience.
